First I had to create some data:

for ((x=0; x<100; x++)); do echo "$(LC_ALL=C tr -dc 'a-z' < /dev/urandom | head -c 15)=$(LC_ALL=C tr -dc 'a-z' < /dev/urandom | head -c 10)" >> testData; done

This block of code runs a for loop 100 times.

Within the loop:

- generate a random key (15 characters) and a random value (10 characters), both made up of lowercase letters, joined as key=value

- append that line to a file called testData
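The steps above can also be sketched as two small functions, which makes the key and value lengths easier to change. This is only an illustration: the names `rand_lower` and `gen_pairs` and the output file `testData.sample` are made up for this example, and the sketch writes to a separate file so it does not touch the real testData.

```shell
# Hypothetical helper: print $1 random lowercase letters.
rand_lower() {
    # LC_ALL=C makes tr treat /dev/urandom as raw bytes;
    # head stops reading once it has the requested number of characters.
    LC_ALL=C tr -dc 'a-z' < /dev/urandom | head -c "$1"
}

# Hypothetical helper: print $1 lines of the form key=value
# (15-character key, 10-character value).
gen_pairs() {
    i=0
    while [ "$i" -lt "$1" ]; do
        printf '%s=%s\n' "$(rand_lower 15)" "$(rand_lower 10)"
        i=$((i + 1))
    done
}

# Write 100 pairs to a sample file (assumed name).
gen_pairs 100 > testData.sample
```

Using `>` here overwrites the sample file on every run, whereas the one-liner appends with `>>`, so running the one-liner twice leaves 200 lines in testData.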

At the moment I can run perf record with the following command:

perf record redis-cli set foo bar

- this lets me sample the command set foo bar

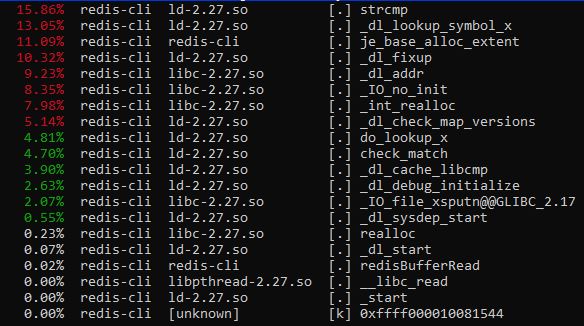

which generates the following report:
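To profile more than a single `set foo bar`, the generated key=value lines could be turned into SET commands first. The sketch below is an assumption about how that might be done, not something I have measured: `pairs_to_set` is a made-up helper name, and the commented-out last line assumes a Redis server is running and that redis-cli reads commands from standard input when it is piped.

```shell
# Hypothetical helper: read key=value lines on stdin and
# print one Redis SET command per line.
pairs_to_set() {
    while IFS='=' read -r key value; do
        # Skip blank or malformed lines.
        [ -n "$key" ] && [ -n "$value" ] || continue
        printf 'SET %s %s\n' "$key" "$value"
    done
}

# Preview with a single pair; prints: SET foo bar
printf 'foo=bar\n' | pairs_to_set

# With a server running, the whole file could be sampled in one go:
# pairs_to_set < testData | perf record redis-cli
```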

Upon further research, Redis actually comes with its own benchmarking tool called redis-benchmark. A great source of documentation published on DigitalOcean can be found here.

An example of a command I ran:

redis-benchmark -t set -n 10000

A simple breakdown of the command:

- redis-benchmark: the command

- -t (tests): run only the listed subset of tests; in this case I'm using set

- -n (number of requests): the total number of requests to send

The tool is useful because it reports how long it took to complete a given number of requests for each operation, which in this case is setting 10000 key/value pairs.

The command also lets users specify the size in bytes of the value used for SET/GET (the -d option, default 3), to ensure consistency between operations. I'm also not limited to benchmarking set: I can pass -t set,get and redis-benchmark will then also benchmark how long it takes to get the same number of values.
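Putting those options together, a combined run might look like the sketch below. The helper name `bench_cmd` and the chosen values are assumptions for illustration: 10 bytes matches the 10-character values generated earlier, and `-q` asks redis-benchmark for its quiet, one-line-per-test output. The command is only executed if redis-benchmark is installed, and it assumes a server is listening on the default host and port.

```shell
# Hypothetical helper: print a redis-benchmark command line for
# the given tests ($1), request count ($2), and value size in bytes ($3).
bench_cmd() {
    printf 'redis-benchmark -t %s -n %s -d %s -q\n' "$1" "$2" "$3"
}

# Benchmark both set and get with 10000 requests and 10-byte values.
cmd=$(bench_cmd set,get 10000 10)
echo "$cmd"

# Run it only when the tool is available.
if command -v redis-benchmark >/dev/null 2>&1; then
    # $cmd is left unquoted on purpose so it splits into separate arguments.
    $cmd || echo "redis-benchmark failed (is a Redis server running?)"
fi
```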
